What does a “data architect” do? This research paper quotes some answers found in the job market.

Preface: Data architecture in the domains and levels of enterprise architecture

Business planning is what business directors and business planners do.

Business planning is informed and supported by business system planning, which enterprise and business architects do.

Up to the 1970s, business systems were often analysed and designed by people in an Operational Research department.

At the start of the Information Age, many Operational Research departments were absorbed into IT departments, because IT departments were created to support and enable business processes that create and use business data.

In the 1980s, Enterprise Architecture (EA) emerged.

It grew out of IBM’s “business system planning”, James Martin’s “information engineering” and other approaches.

All these approaches urged enterprises to take a strategic and cross-organizational view of business processes and data.

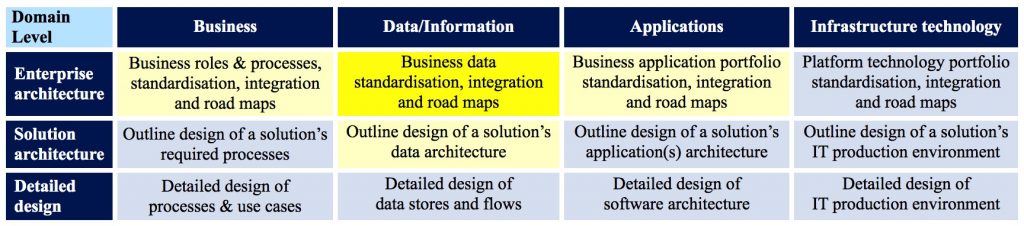

And since the PRISM report of 1986, architecture has been divided into four domains – reflected in the columns of the table below.

The table below positions EA as a high-level, cross-organisational and strategic view of business systems.

And it positions data architecture in the yellow boxes.

Every enterprise has to work out for itself how its roles cover the cells of the table.

It is usually expected that data architects are concerned with:

- Data stores (data at rest)

- Data flows (data in motion)

- Data qualities

Enterprise-level data architects set general principles and standards and govern the use of data by architects at lower levels.

They analyse and design the Enterprise Data Architecture, including some or all of OLTP, OLAP, Data Warehouse, Document Stores and Big Data, and address Information Life Cycles.

Solution/software level data architects are concerned with the data needed to support specific solutions and applications.

Example role definitions

Example 1 – enterprise level

“Leading bank is urgently seeking a proven EA to

- engage and lead IT projects including

- the Enterprise Information Architecture

- information models and flows,

- data dictionaries, data standards

- data quality standards and processes

- develop and maintain the logical Enterprise Information Architecture that enables seamless information interoperability of all Bank systems for efficiency and cost-effectiveness.”

Example 2 – enterprise level

“Drive toward strategic Architecture goals and objectives by enhancing the coherence, quality, security and availability of the organization’s data assets through the development and deployment of Architecture roadmap.

Be primary advocate of standards, data modeling and data processing artefacts and guide data architecture work across multiple projects.

Provide strategic architectural oversight on assigned projects, including development of data model, interface and migration formats.

Key responsibilities

- Define and implement overall data modelling standards, guidelines, best practices and approved modelling techniques and approaches

- Plan, document and present enterprise data architecture-related strategies such as structured, unstructured and metadata

- Supervise the creation of physical and logical data models in the assigned project

- Support the development of the IT Strategies and their alignment with the Business Strategies

- Drive best practices in relation to data security, backup, disaster recovery, business Continuity and archiving.

- Lead the reviews of project Architecture Definition Documents and related artifacts to ensure that projects align their design to agreed and appropriate architectures

- Drive best practice in regards to reference / master data, transactional processing systems, data warehouse, business intelligence, and reporting technologies

- Create and communicate data architecture, data quality, and data integration policies, standards, guidelines, and procedures throughout the organization.

- Create and maintain data environment performance attributes in order to ensure optimal performance of the organization’s data environment

- Participate in all data integration and enterprise information management (EIM) programs and projects by rationalizing data processing for reusable module development

- Select and implement the appropriate tools, software, applications, and systems to support data technology goals.

- Act as liaison to vendors and service providers to select the products or services that best meet company goals and requirements

- Oversee / provide specifications for the mapping of data sources, data movement, Interfaces and data models with the goal of ensuring data quality and loose coupling of applications through canonical standards and mediation technologies

- Collaborate with project managers, address data related problems in regards to systems Integration and compatibility

- Drive development and use of data / metadata assets such as domain models, general data models (ERDs), Semantic layers (e.g. Cognos Framework) and messaging payload standards

Required experience

- Extensive experience of the Data Architecture discipline in a global organisation in 40+ locations with multiple corporate entities

- Extensive data modelling, manipulation and rationalisation experience within complex environments.

- Broad and in-depth experience of complex data environments design

- Considerable experience of SOA based Enterprise Data architectures

- Experience of MDM is a definite advantage.

- Proven expertise on RDBMS – Oracle Desirable; SQL/Server and DB2 required

- Proven expertise on Data appliances – Netezza, Teradata

- Knowledge of web services and XML messaging including XSD, WSDL, SOAP and REST is desirable.

- Experience in data migration and ETL – knowledge of Informatica desirable.

- Ability to conduct research into emerging technologies and trends, standards, and products as required

Data concerns

Industry experts contend that every organisation, regardless of sector, has data quality issues.

Modern organisations produce and consume large amounts of data.

Years ago the challenge was to cope with the quantity of data.

Today, the challenge is one of improving and maintaining the quality of the data.

Data quality issues

The points below come from three speakers at a meeting of BCS DMSG [datamanagementmem@lists.bcs.org.uk]

Ensuring “fit for purpose” master data through business engagement

- The aim of master data management is to deliver a “trusted” source of master data across an organisation or business unit.

- Business engagement, data quality management and data governance are critical

A balanced approach to scoring data quality

- Data profiling measures data quality against a number of data dimensions [including] metrics related to customer, processes and financial performance.
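As a rough illustration of what profiling against data dimensions can look like in practice, here is a minimal sketch in Python. The two dimensions measured (completeness and uniqueness), the function name `profile_column` and the sample records are all assumptions made for this example; the speaker's own scoring approach, which also covers customer, process and financial metrics, is not described in the source.

```python
from collections import Counter


def profile_column(rows, column):
    """Return simple quality metrics for one column of a list of dict records.

    Hypothetical helper: measures only completeness and uniqueness,
    two commonly used data quality dimensions.
    """
    values = [row.get(column) for row in rows]
    total = len(values)
    # Treat None and the empty string as missing values.
    present = [v for v in values if v not in (None, "")]
    counts = Counter(present)
    # Count every repeat of a value after its first occurrence.
    duplicates = sum(n - 1 for n in counts.values() if n > 1)
    return {
        "completeness": len(present) / total if total else 0.0,
        "uniqueness": len(counts) / len(present) if present else 0.0,
        "duplicates": duplicates,
    }


# Hypothetical sample records, invented for illustration only.
customers = [
    {"id": 1, "email": "ann@example.com"},
    {"id": 2, "email": ""},
    {"id": 3, "email": "ann@example.com"},
    {"id": 4, "email": "bob@example.com"},
]

email_profile = profile_column(customers, "email")
print(email_profile)
```

In this sample, three of the four records have an email address and one address is repeated, so the profile flags both a completeness gap and a duplicate. A real profiling exercise would cover many more dimensions and would weight them against business impact.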

The impact of user behaviours on data quality

- Users can introduce data errors which slowly degrade the quality of data to the point where it is no longer fit for purpose.

Data profiling

This quote is from http://information-builders.msgfocus.com

Find out how to:

- Reduce the cost of bad data by learning to cleanse and improve the overall quality levels of your data

- Improve the quality of your business decisions by maintaining the data quality within your company in real-time

- Increase competitive advantage by gaining further customer insight, ensuring data from different systems is integrated into one common enterprise-wide data platform

- Create a single view of customers and your products by implementing the foundations for an enterprise information management initiative that allows you to optimise overall performance

- Benefit from a complimentary Data Profiling: provide us with a sample of your data and allow us to uncover data-quality issues and provide you with a detailed report.

Semantic interoperability

This quote is from www.eitaglobal.com

“In an economy where a company’s business network of suppliers, distributors, partners, and customers is an increasingly important source of competitive advantage,

semantic interoperability – the ability of human and automated agents to coordinate their functioning based on a shared understanding of the data that flows among them –

is a major economic enabler.

Consequently, semantic interoperability problems drive up integration costs across industry.”

Canonical data models

Canonical data models define standard types and formats for data items and data structures in messages.
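To make the idea concrete, the sketch below shows one possible canonical type and two mappings into it, written in Python. Everything in it is hypothetical: the `CanonicalCustomer` type, the two source systems (a "CRM" and a "billing" system) and their field names are invented for illustration and do not come from any of the industry standards listed in this section.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CanonicalCustomer:
    """Hypothetical canonical message type with agreed types and formats."""
    customer_id: str      # always a string, whatever the source system uses
    full_name: str
    date_of_birth: date   # a real date value, not a free-format string
    country_code: str     # upper-case two-letter code


def from_crm(record: dict) -> CanonicalCustomer:
    """Map an assumed CRM record (DD/MM/YYYY dates, split names)."""
    day, month, year = record["dob"].split("/")
    return CanonicalCustomer(
        customer_id=str(record["CustNo"]),
        full_name=f'{record["first"]} {record["last"]}',
        date_of_birth=date(int(year), int(month), int(day)),
        country_code=record["country"].upper(),
    )


def from_billing(record: dict) -> CanonicalCustomer:
    """Map an assumed billing record (ISO dates, single name field)."""
    return CanonicalCustomer(
        customer_id=record["account"],
        full_name=record["name"].strip(),
        date_of_birth=date.fromisoformat(record["birth_date"]),
        country_code=record["ctry"].upper(),
    )


# The same customer as two invented source systems might hold it.
crm = {"CustNo": 1042, "first": "Ann", "last": "Lee",
       "dob": "07/03/1980", "country": "gb"}
billing = {"account": "1042", "name": " Ann Lee ",
           "birth_date": "1980-03-07", "ctry": "GB"}

same = from_crm(crm) == from_billing(billing)
print(same)
```

Each source system needs only one mapping to the canonical form, rather than one mapping to every other system it exchanges messages with. That is the loose coupling the job advert in Example 2 refers to.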

This quote is from Pete O’Dell “Silver Bullets”.

A short tour of the non-technical industry efforts to create a common XML-based vocabulary for specified purposes and industries.

- Astronomy. See http://fits.gsfc.nasa.gov.

- Built environment, and infrastructure systems integration. See www.obix.org.

- Distribution/Commerce. See www.rosettanet.org.

- Education. See www.sifinfo.org.

- Financial reporting. See www.xbrl.org.

- Financial research. See www.rixml.org.

- Food. See www.mpxml.org.

- Healthcare. See www.hl7.org.

- Information technology architecture. See www.opengroup.org.

- Instruments. See www.nasa.gov

- Insurance. See www.acord.org.

- Legal. See www.legalxml.org.

- Manufacturing. See www.pslx.org.

- News. See www.iptc.org.

- Oil and gas. See www.pidx.org.

- Publishing. See www.oasis.org.

- Real Estate. See www.RETS.org.

- Research. See www.casrai.org.

- Telecommunications. See www.atis.org. Also TMF.

Other canonical data models include:

- FPML (financial products)

- FIXML (financial instruments)

- OASIS: Names? Addresses?

- Open Travel Alliance (OTA): cars, hotels, insurance, airports, currencies, countries

- Air travel – PNR passenger name record

- ARTS (association of retail, textile…)

- Open Geospatial Consortium (OGC)

- JISC – universities

- TransXchange SIRI

- TRANSMODEL

Reposted: Data architect roles

http://grahamberrisford.com/01EAingeneral/EAroles/Data%20architect%20roles.htm